When choosing a small language model, the main indicator you have is the parameter size. In the Llama suite supported by ATōMIZER, we have access to 1B, 3B, and 8B parameter Llama models (roughly 1, 3, and 8 billion parameters).

These billions of parameters are the learned numbers (weights) inside the model. How many there are depends on the model's architecture, such as how many layers it has and how large each layer is. When choosing a model, though, the easiest way to think about it is to ask: how complex is my task? Does it involve a lot of reasoning? If so, you may want more parameters, which give the model more capacity to consider different aspects of a problem.
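To make "parameter" concrete: each parameter is a single learned number. As a minimal sketch, the snippet below counts the parameters in a small stack of generic fully connected layers, where each layer has one weight per input-output pair plus one bias per output. This is a toy network, not the Llama architecture, and the layer sizes are hypothetical; real Llama models are built from transformer blocks with far larger layers, which is how the totals reach billions.

```python
def dense_layer_params(n_inputs: int, n_outputs: int) -> int:
    """Parameters in one fully connected layer: a weight per input-output pair plus a bias per output."""
    return n_inputs * n_outputs + n_outputs


def count_params(layer_sizes: list[int]) -> int:
    """Total parameters in a stack of dense layers, e.g. [4, 8, 2] means 4 -> 8 -> 2."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Hypothetical toy network: 4 inputs, one hidden layer of 8 units, 2 outputs.
toy_sizes = [4, 8, 2]
print(count_params(toy_sizes))  # (4*8 + 8) + (8*2 + 2) = 58
```

Making the layers wider or adding more of them grows the count quickly, which is why parameter count is a rough proxy for a model's capacity.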

The weights within a model make some words in the prompt or question more influential than others when the model forms its answer.
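One mechanism behind this is attention, where the model scores how relevant each word is and turns those scores into weights that add up to 1. The sketch below applies a softmax to made-up relevance scores for the words in a simple question. The scores are hypothetical; in a real model they are computed from the learned weights rather than written by hand.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw relevance scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical relevance scores for each word of the question.
words = ["What", "is", "the", "capital", "of", "France", "?"]
scores = [0.1, 0.0, 0.0, 2.5, 0.1, 3.0, 0.0]

weights = softmax(scores)
# Print the words from most to least influential.
for word, weight in sorted(zip(words, weights), key=lambda pair: pair[1], reverse=True):
    print(f"{word:>8}: {weight:.2f}")
```

With these scores, "France" and "capital" end up with most of the weight, while filler words like "the" contribute very little.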

If we ask a 1B model:

“What is the capital of France?”

It can recognize that you are interested in a location (capital) and a specific one (France) and point you to the answer (Paris) fairly easily. Only one simple lookup is involved.

If we were to create a more complex question like:

“What is the capital letter of the capital of the following country: France?”

Now the model has more internal work to do. At first it may assume we are again looking for a place, but the word "letter" tells it that "capital" also refers to the characters in a word. It has to keep track of the fact that we want a capital letter, while recognizing that the letter only matters after it has found the capital city of the country. The task involves several steps that depend on each other, and the more complex the task, the more parameters the model needs to sort out and learn that complexity.
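The dependency between the two steps in this question can be written out explicitly. In this sketch, a tiny hypothetical lookup table stands in for knowledge the model has learned, and the function names are made up for illustration. A model does not call separate functions like this; it resolves both meanings of "capital" implicitly within its parameters. The point is only that step 2 cannot start until step 1 has produced the city name.

```python
# Hypothetical stand-in for knowledge the model has learned.
CAPITALS = {"France": "Paris", "Japan": "Tokyo", "Peru": "Lima"}


def capital_city(country: str) -> str:
    """Step 1: 'capital' in the geographic sense."""
    return CAPITALS[country]


def capital_letter(city: str) -> str:
    """Step 2: 'capital' in the typographic sense, the uppercase letter the name starts with."""
    return city[0].upper()


def capital_letter_of_capital(country: str) -> str:
    # Step 2 depends on the output of step 1, so the order matters.
    city = capital_city(country)
    return capital_letter(city)


print(capital_letter_of_capital("France"))  # P
```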

If we then made the prompt even more complex by pasting an entire entry from Wikipedia and another from an encyclopedia into it, and then asking:

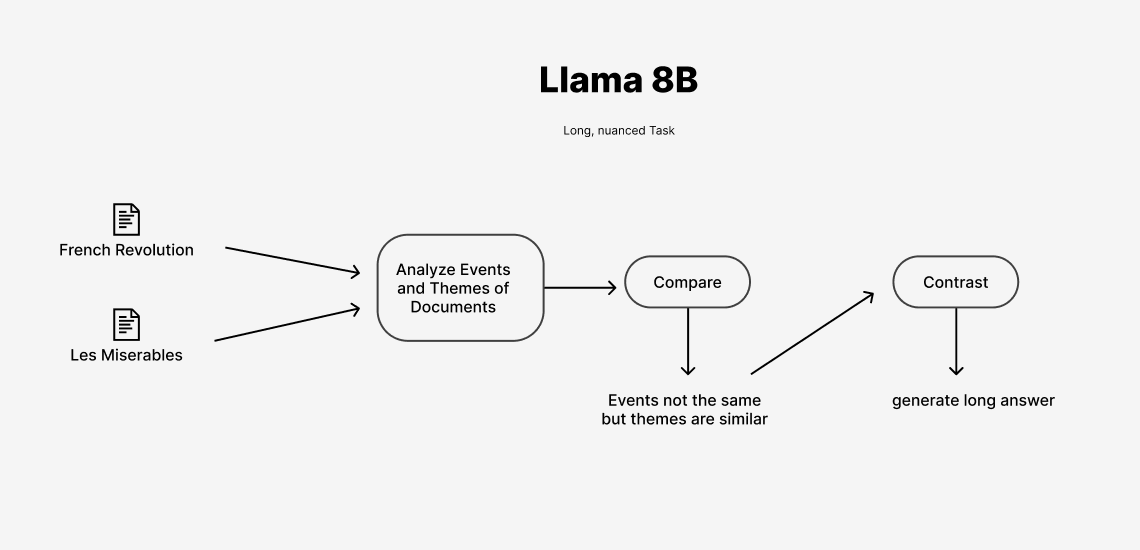

“Analyze the events and themes, then compare and contrast the French Revolution with the events in Les Misérables.”

Now the model has to read all of the text we gave it and notice that the story of Les Misérables begins about 16 years after the Revolution, so we are probably not asking it to compare facts about the same events but rather to compare themes. It then needs to recall the themes in both long texts, which means a lot of looking back and forth and reasoning over a very long context. The 8B model can handle this more easily than the 1B or 3B models.

Consider the size and complexity of your task when choosing a model. Short prompts that need little reasoning can work with the smallest model, and somewhat more complicated reasoning tasks can work with the medium-sized model. Long contexts, which by their nature tend to require more complex reasoning, are best suited to the largest model.

Short and simple = 1B

Moderately complex, a few steps or constraints = 3B

Long, nuanced, or synthesis-heavy = 8B
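The rule of thumb above can be expressed as a small helper if you want to route prompts automatically. This is a hedged sketch: the function names, the thresholds, and the "about 4 characters per token" estimate are all assumptions for illustration, not ATōMIZER settings or tested cutoffs, so tune them against your own tasks. The `reasoning_steps` argument is your own estimate of how many dependent steps a task needs.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate; ~4 characters per token is an assumed rule of thumb for English text."""
    return max(1, len(text) // 4)


def suggest_llama_size(prompt: str, reasoning_steps: int) -> str:
    """Hypothetical heuristic mapping prompt length and reasoning depth to 1B, 3B, or 8B.

    The thresholds below are illustrative assumptions only.
    """
    tokens = estimate_tokens(prompt)
    if tokens > 2000 or reasoning_steps > 3:
        return "8B"  # long, nuanced, or synthesis-heavy
    if tokens > 200 or reasoning_steps > 1:
        return "3B"  # moderately complex, a few steps or constraints
    return "1B"  # short and simple


print(suggest_llama_size("What is the capital of France?", reasoning_steps=1))  # 1B
print(suggest_llama_size(
    "What is the capital letter of the capital of the following country: France?",
    reasoning_steps=2,
))  # 3B
```

A heuristic like this is only a starting point; if the smaller model's answers fall short on a given task, moving up a size is the simplest fix.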